DE-PROSECUTION AND DEATH

A Cordial Reply to Kaplan, Naddeo & Scott

Thomas P. Hogan*

Summary

I engaged in an original study exploring the potential causal link between the policy of de-prosecution and homicides in Philadelphia. Using the traditional synthetic control method, the model estimates that de-prosecution is associated with a statistically significant increase of 74.79 homicides per year in Philadelphia during 2015-19. A response criticized these results, claiming to have “flipped” the results using an augmented synthetic control method to show that de-prosecution is associated with a reduction of 46.22 homicides per year in Philadelphia, even as homicides in Philadelphia were steadily rising in the real world. Unfortunately, the response is based on critical and obvious errors in data, such as claiming that New York City cleared 0% of its homicides from 2010-12 (and numerous other equally obvious instances of incorrect data applied to both donor cities and the treatment city). When the data flaws are removed, the original results are shown to be robust using both traditional and augmented synthetic control methods. The response is otherwise notable for independently replicating the results of the original study and performing various robustness tests that act as further validation that de-prosecution is having a large and statistically significant impact on increasing homicides in Philadelphia.

Policy Implications

Statistical critiques should be tempered by considering real-life facts and accurate data. While there is no unqualifiedly perfect way of estimating the impact of de-prosecution on homicides in Philadelphia, the careful and conservative application of statistical techniques supports a strong inference that the policy of wide-scale de-prosecution across felonies and misdemeanors is producing a large increase in homicides in Philadelphia. Practitioners in the criminal justice system should adjust their strategies to reflect this finding.

Key words: de-prosecution, homicide, synthetic control, data error, reverse p-hacking.

Declarations of interest: none.

*Mr. Hogan has served as a federal prosecutor, local prosecutor, elected district attorney, and criminal defense lawyer. He received his undergraduate degree from Dartmouth College, a Master of Science in Criminology from the University of Pennsylvania, and his Juris Doctor from the University of Virginia School of Law.

1 | INTRODUCTION

In De-Prosecution and Death: A Synthetic Control Analysis of the Impact of De-Prosecution on Homicides (Hogan, 2022) (hereinafter, the “De-Prosecution Article”), I examined the potential causal link between the policy of de-prosecution and homicides in Philadelphia. De-prosecution is the decision not to prosecute certain crimes, regardless of the underlying evidence. Philadelphia was a logical place to study this issue, as the city experienced a large drop in prosecutions while other conditions remained largely static. Sentencings were reduced by approximately 70% from 2014 to 2019, covering substantial drops in both misdemeanors (e.g., drug possession, retail theft) and felonies (e.g., drug trafficking, weapons offenses, robbery, burglary). At the same time, homicides were rising dramatically: from a low of 248 deaths in 2014 to 356 by 2019, and then to a new all-time record of 561 in 2021. After reviewing descriptive statistics, I used the synthetic control method to estimate a potential causal effect of de-prosecution on homicides in Philadelphia, using a pre-period of 2010-14 and a post-period of 2015-19. The potential donor pool comprised the other 99 largest cities in the United States, and standard variables were included in the model. The results showed a statistically significant increase of 74.79 homicides per year in Philadelphia associated with the de-prosecution policy.

A response has been publicly posted criticizing the results of the De-Prosecution Article, entitled De-Prosecution and Death: A Comment on the Fatal Flaws in Hogan (2022) (Kaplan et al., 2022) (hereinafter, the “Kaplan Response”). While not peer reviewed, the Kaplan Response criticizes the data, methods, and results from the De-Prosecution Article.[1]

There are three salient points in replying to the Kaplan Response. First, when using accurate data, the Kaplan Response precisely and independently replicates the findings of the De-Prosecution Article. Second, even when using inaccurate data (e.g., knowingly undercounting Philadelphia homicides) and improper methods (e.g., causing overfitting by using rates), the Kaplan Response repeatedly confirms that de-prosecution is associated with a large increase in homicides in Philadelphia. Third, the Kaplan Response relies on flawed data and methodological errors in an attempt to challenge the results of the De-Prosecution Article, leading to results which are illogical, incorrect, and remarkably contrary to reality. In particular, the Kaplan Response published a puzzling augmented synthetic control model estimating that Philadelphia’s de-prosecution policy is associated with reducing homicides by 46.22 per year, in the face of ever-increasing homicides in that city. Regarding the latter claimed result, the authors of the response committed a massive data error; for instance, the Kaplan Response used data claiming that the donor city New York cleared zero homicides in the 2010-12 time period and over 100% of homicides in 2017, in addition to multiple other obvious data errors that drove their flawed result. The authors of the Kaplan Response failed adequately to assemble, clean, and check their critical data. Once the large data errors created by the Kaplan Response are corrected, the original results of the De-Prosecution Article remain robust, whether using the traditional or augmented synthetic control algorithm.

2 | INDEPENDENT REPLICATION BY THE KAPLAN RESPONSE

Perhaps the most important contribution of the Kaplan Response is that it independently replicates the main findings of the De-Prosecution Article. The original study relied upon publicly available data sources regarding homicides, population, income, and homicide clearances, plus a unique taxonomy of the prosecutors’ offices for the 100 largest American cities (which was published in the De-Prosecution Article). In the Kaplan Response, the authors independently compiled the same publicly available data sources and re-ran the synthetic control model using their own code. The results were an almost exact replication of the main findings of the De-Prosecution Article. The De-Prosecution Article estimates a difference-in-differences (“DiD”) increase of 74.79 homicides per year (p=0.012) associated with the de-prosecution policy. The Kaplan Response estimates a DiD increase of 74.25 homicides per year (p=0.015). Thus, the authors of the Kaplan Response have succeeded in independently replicating the results of the De-Prosecution Article, without being biased by shared data or code (Kaplan et al., 2022).[2] This is a valuable validation of the findings from the De-Prosecution Article.
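One common way to express a synthetic control estimate as a difference-in-differences is to subtract the average pre-period gap between real and synthetic Philadelphia from the average post-period gap. The sketch below assumes that definition; the function name and the toy series are hypothetical and are not the data or code of either study.

```python
from statistics import mean


def scm_did(real_pre, synth_pre, real_post, synth_post):
    """Average post-period gap minus average pre-period gap (assumed DiD definition)."""
    if len(real_pre) != len(synth_pre) or len(real_post) != len(synth_post):
        raise ValueError("real and synthetic series must align year by year")
    pre_gap = mean(r - s for r, s in zip(real_pre, synth_pre))
    post_gap = mean(r - s for r, s in zip(real_post, synth_post))
    return post_gap - pre_gap


# Hypothetical toy series: zero average pre-period gap, 25-homicide average post-period gap.
print(scm_did([100, 102], [101, 101], [120, 130], [100, 100]))  # 25
```

Subtracting the pre-period gap means a small residual misfit before treatment is not counted as part of the estimated effect.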

The Kaplan Response also attempts to challenge the results of the De-Prosecution Article using data that undercounts Philadelphia homicides. Researchers who are familiar with Philadelphia homicide data and the FBI’s Supplemental Homicide Report (SHR) know that Philadelphia is a city which misreports its homicide data to the FBI. For instance, in 2019, Philadelphia reported 265 homicides to the SHR, while the actual number recorded by the Philadelphia Police Department was 356 homicides; for 2020, the SHR reflects 201 homicides for Philadelphia, while the police officially recorded 499 homicides (Kaplan, 2021; Philadelphia Police Department, 2022).[3] The Kaplan Response attempts to model the synthetic control results relying solely on the incorrect SHR data, knowingly undercounting Philadelphia homicides by a significant factor. Despite the use of incorrect data, the results show a DiD increase of 49 homicides per year for Philadelphia (p=0.055). Thus, even when undercounting Philadelphia homicide data, the Kaplan Response shows a large increase in homicides associated with de-prosecution in Philadelphia, within a rounding error of statistical significance at the p<.05 level (Kaplan et al., 2022). The Kaplan Response is literally hiding dead bodies, a well-respected tactic in criminal circles, but a disfavored practice in law enforcement and academics.[4]
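The size of the SHR shortfall for Philadelphia follows directly from the two years of figures quoted above. The helper name below is hypothetical; the counts are those stated in the text.

```python
# Philadelphia homicide counts quoted above: (SHR count, Philadelphia Police Department count).
COUNTS = {2019: (265, 356), 2020: (201, 499)}


def shr_gap(shr, police):
    """Return (homicides missing from the SHR, share of police-recorded homicides the SHR captures)."""
    return police - shr, shr / police


for year, (shr, police) in COUNTS.items():
    missing, share = shr_gap(shr, police)
    print(f"{year}: {missing} homicides missing from the SHR; SHR captures {share:.1%}")
```

On these figures the SHR omits 91 Philadelphia homicides in 2019 (capturing about 74.4%) and 298 in 2020 (about 40.3%).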

In addition to replicating the original study, another interesting aspect of the Kaplan Response is the large number of issues that are conceded. The authors do not challenge that the Philadelphia DAO has engaged in de-prosecution across a broad array of crimes, including felonies and misdemeanors. The response likewise concedes that homicides have been rapidly increasing in Philadelphia during the tested post-period. The response admits that the trends for most traditional and moderate prosecution cities remained relatively flat or declining for homicides during the tested period. The response does not contest that the background factors in Philadelphia were stable regarding economics, population, and political party control of the city, with no major incidents such as the Michael Brown shooting in Ferguson or the George Floyd killing in Minneapolis. The response concedes that the classification of prosecutors is correct.[5] Finally, the response acknowledges that the synthetic control model is the best method for testing the impact of de-prosecution on homicides, given available data.

Thus, the Kaplan Response precisely replicates the original study, even showing strong results when undercounting homicides in the treatment city, and concedes the accuracy of previously unreported descriptive data. With all of these basic concessions on major facts and results, the Kaplan Response then chooses remarkably small issues to misstate or misunderstand, except for their critical data error discussed below.

3 | THE KAPLAN RESPONSE’S CLAIM TO HAVE “FLIPPED” THE RESULTS OF THE ORIGINAL STUDY IS BASED ON FLAWED METHODOLOGY AND A CRITICAL DATA ERROR

The Kaplan Response claims that the original study should have used an augmented synthetic control method, and that application of this method “flips” the direction of the effect on homicides, rendering the original results null (Kaplan et al., 2022). The results of the Kaplan Response are based on a flawed methodological premise and a startlingly large data error. Once this data error is corrected, the original results of the De-Prosecution Article remain robust. The Kaplan Response’s methodological mistake and data error are discussed below.

3.1 | THE FLAWED PREMISE OF THE KAPLAN RESPONSE

The Kaplan Response replaces the traditional synthetic control model used in the De-Prosecution Article with an augmented synthetic control model, intended to be used when the classic model results in a poor pre-period match (Abadie & L’Hour, 2021). The Kaplan Response maintains that use of the augmented synthetic control model is necessary because of “the arguably poor pre-intervention fit in the author’s main SCM analysis” (Kaplan et al., 2022).

On this point, the Kaplan Response is factually incorrect. The mean difference in the pre-intervention period between synthetic and real Philadelphia is -0.165 homicides. To put this into context, the mean number of homicides per year in Philadelphia during the pre-period was 291.4. The divergence is minimal.[6] As specified in the De-Prosecution Article, the normalized root mean square error for that pre-period comparison is 0.05387. This is a classic case where the pre-period fit is strong and the traditional synthetic control method applies.
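The magnitudes quoted above can be checked directly. The sketch computes the average pre-period gap as a share of the average level and adds a normalized RMSE helper. Normalizations of RMSE differ (by the mean, the range, or the standard deviation of the observed series); dividing by the mean is an assumption here and may not match the normalization behind the 0.05387 figure. Function names are hypothetical.

```python
import math


def relative_gap(mean_gap, mean_level):
    """Size of the average pre-period gap relative to the average outcome level."""
    return abs(mean_gap) / mean_level


def normalized_rmse(real, synth):
    """Root mean square error divided by the mean of the observed series (assumed normalization)."""
    if len(real) != len(synth):
        raise ValueError("series must have equal length")
    rmse = math.sqrt(sum((r - s) ** 2 for r, s in zip(real, synth)) / len(real))
    return rmse / (sum(real) / len(real))


# Figures quoted in the text: mean gap of -0.165 against a pre-period mean of 291.4.
print(f"{relative_gap(-0.165, 291.4):.4%}")  # 0.0566%
```

The average pre-period gap is therefore well under one-tenth of one percent of Philadelphia's average annual homicide count.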

For an excellent analogy to the De-Prosecution Article, one may refer to the canonical Abadie et al. (2010) study applying the synthetic control model to estimating the potential causal impact of California’s Proposition 99, imposing a tax on the purchase of cigarettes. Abadie et al. (2010) used California as the treatment state, with the other 49 states as a potential donor pool. They disqualified certain states as potentially exposed to the same treatment, leaving a donor pool of 38 states. The study used four predictors: price of cigarettes, income, percentage of population aged 15-24, and per capita beer consumption (augmented by three years of lagged smoking consumption). Applying the traditional synthetic control method, the trends showed a statistically significant divergence between synthetic California and real California. There was some small pre-intervention divergence noticeable in the synthetic control plot, with a pre-intervention MSPE of approximately 3 for California (MSPE of 6 for the donor pool), which Abadie and colleagues describe as “quite small” and “providing an excellent fit” for the pre-intervention period. Abadie et al. (2010) then added a collection of other variables and discovered that the additional variables had no material effect on the outcome. The De-Prosecution Article intentionally mirrors this well-accepted application of the traditional synthetic control method. Abadie et al. (2010) did not see the need to modify the traditional synthetic control algorithm in this context with bias adjustments, such as the later-conceived augmented synthetic controls.
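The pre-intervention MSPE cited for California is the mean of the squared yearly gaps between the real and synthetic series. A minimal sketch follows; the function name and the three-year toy series are hypothetical. On the figures quoted above, California's pre-intervention MSPE of about 3 is roughly half the donor-pool figure of about 6.

```python
def mspe(real, synth):
    """Mean squared prediction error between an observed series and its synthetic counterpart."""
    if len(real) != len(synth):
        raise ValueError("series must have equal length")
    return sum((r - s) ** 2 for r, s in zip(real, synth)) / len(real)


# Hypothetical three-year pre-period: squared gaps of 1, 0, and 4.
print(mspe([10, 12, 11], [9, 12, 13]))  # 5/3, about 1.67
```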

We would expect some pre-treatment divergence in a synthetic control plot, either positive or negative, because there is no perfect counterfactual for Philadelphia even using synthetic controls. The original synthetic control plot shows small divergences, both positive and negative, between synthetic and real Philadelphia over the course of the pre-period. The only way to avoid this result would be to induce overfitting, the approach the Kaplan Response later chose and one the De-Prosecution Article called out as invalid. These divergences were left in the De-Prosecution Article for complete transparency, as the original study explicitly noted. In this case, the augmented synthetic control method is not called for, being more likely to confound results than to reduce bias.

3.2 | THE KAPLAN RESPONSE MAKES AN EGREGIOUS DATA ERROR

Although the Kaplan Response’s initial basis for using the augmented synthetic control method is invalid, it is nevertheless useful to explore the authors’ use of that method. The Kaplan Response claims that they are attempting to use a “bias-corrected” synthetic control model. The authors’ first step is to remove median income, a classic economic variable, as a predictor without any explanation (Kaplan et al., 2022). Instead of bias-correction, the Kaplan Response immediately appears to be engaging in a bias-inducing exercise, foreshadowing a methodological problem. The Kaplan Response’s preferred model, a “bias controlled SCM model,” produces a proposed result associating de-prosecution with -46.22 homicides in Philadelphia. In other words, they are hypothesizing that de-prosecution is associated with 46.22 fewer homicides per year in Philadelphia. They reach this conclusion despite the fact that during the period in question and beyond, in the real world, Philadelphia homicides were relentlessly moving upwards, even as homicides in non-“progressive” prosecution cities generally remained flat or declined. To illustrate how implausible the Kaplan Response’s results are, starting from a baseline of 248 homicides in 2014 and converting their estimate into a predictor, their model predicts that Philadelphia should have recorded only 18 homicides in 2020 and none (or -28 homicides) in 2021. In fact, there were 499 homicides in 2020 and 561 in 2021. The original De-Prosecution Article associated de-prosecution with an average increase of 74.79 homicides per year in Philadelphia during 2015-19, noting an acceleration to over 100 homicides per year during 2018-19 when de-prosecution was at its most extreme, leading to a credible prediction of where homicides in Philadelphia were headed by 2020-21 (obviously without consideration of the unique circumstances of those years).
The stark divergence between the Kaplan Response’s results from a heavily manipulated model and the real world should have given the authors a strong signal that their methodology was seriously flawed.
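The projection above treats the estimated effect as a constant annual change applied cumulatively to the 2014 baseline of 248 homicides. With the estimate rounded to -46 per year, the fifth and sixth annual steps produce the figures of 18 and -28 cited in the text. This reading of the calculation is an assumption, and the helper name is hypothetical.

```python
def cumulative_projection(baseline, annual_change, steps):
    """Apply a constant annual change cumulatively to a baseline count."""
    return [baseline + annual_change * k for k in range(1, steps + 1)]


# Baseline of 248 homicides, estimate rounded to -46 per year.
print(cumulative_projection(248, -46, 6))  # [202, 156, 110, 64, 18, -28]
```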

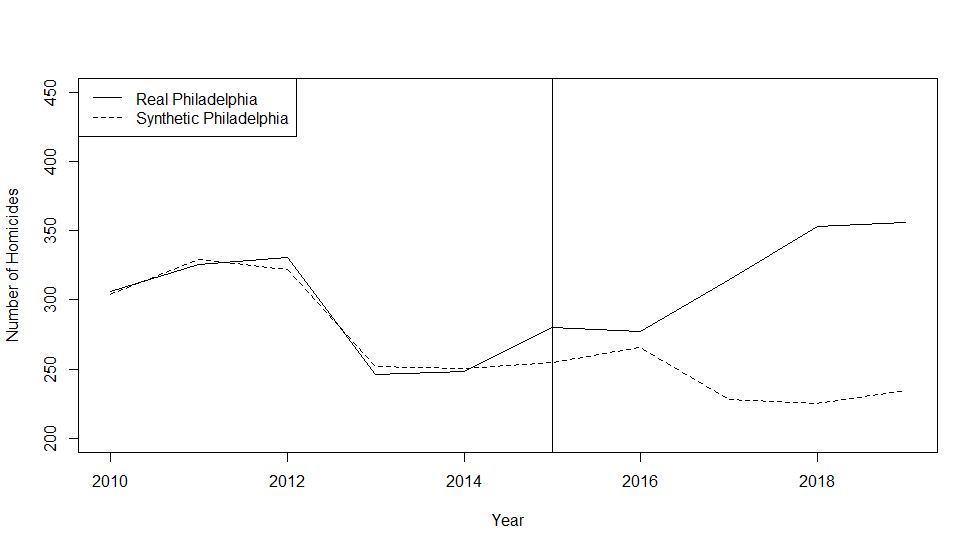

Curious about this divergence between the Kaplan Response’s model and the real world of homicides, as well as the divergence from the results of the De-Prosecution Article using the traditional synthetic control method, I re-ran the model with the original data and the Augsynth package in R as a robustness test. Augsynth is the package in R for application of the augmented synthetic control method. The results utilizing the Augsynth package are shown below in Figure 1:

Figure 1

Augmented Synthetic Control Plot

Philadelphia Homicides Compared to Non-Progressive Cities

Notes: The solid horizontal line shows homicides by year in real Philadelphia. The dashed line shows homicides by year in a counter-factual synthetic Philadelphia. The vertical line at 2015 divides the pre-period and the post-period.

The results using the augmented synthetic control algorithm serve as a robustness test for the original results from the De-Prosecution Article. The Augsynth package shows a statistically significant increase of 83.04 homicides per year associated with de-prosecution in Philadelphia (compared to the original model’s estimate of 74.79 homicides). Like the original model using the traditional synthetic control method, there is a lag period for de-prosecution to begin to have an effect, typical of changes in criminal justice policies. Like the traditional synthetic control model, the augmented synthetic control method shows a large acceleration in homicides during the most intense period of de-prosecution (2018-19). The plots are virtually identical. Given the strong pre-period fit, it makes intuitive sense that a bias-correcting model would show substantially similar results when there was no bias to correct. In short, the augmented synthetic control method using the Augsynth package replicates the logical results of the traditional synthetic control method using the Synth package from the De-Prosecution Article: a large and statistically significant increase in homicides associated with de-prosecution in Philadelphia.
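The intuition that a bias correction should change little when the pre-period fit is already good can be made concrete. The sketch below implements a ridge-augmented synthetic control estimator in NumPy: synthetic control weights restricted to the simplex, plus a ridge-regression correction for whatever pre-period imbalance remains. It is a hypothetical, outcome-only illustration (no covariates, an arbitrary penalty `lam`, and an accelerated projected-gradient solver chosen so the block needs only NumPy); it is not the Augsynth package's code or the specification used in either study.

```python
import numpy as np


def project_to_simplex(v):
    """Euclidean projection onto {w : w >= 0, sum(w) = 1} (sort-based algorithm)."""
    u = np.sort(v)[::-1]
    css = np.cumsum(u)
    k = np.arange(1, v.size + 1)
    rho = np.nonzero(u - (css - 1.0) / k > 0)[0][-1]
    theta = (css[rho] - 1.0) / (rho + 1.0)
    return np.maximum(v - theta, 0.0)


def scm_weights(y1_pre, y0_pre, iters=5000):
    """Simplex-constrained least squares via accelerated projected gradient (FISTA).

    y1_pre: (T0,) treated pre-period outcomes; y0_pre: (T0, J) donor pre-period outcomes.
    """
    n_donors = y0_pre.shape[1]
    step = 1.0 / (2.0 * np.linalg.norm(y0_pre, 2) ** 2)  # 1 / Lipschitz constant of the gradient
    w = z = np.full(n_donors, 1.0 / n_donors)
    t = 1.0
    for _ in range(iters):
        grad = -2.0 * y0_pre.T @ (y1_pre - y0_pre @ z)
        w_next = project_to_simplex(z - step * grad)
        t_next = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        z = w_next + ((t - 1.0) / t_next) * (w_next - w)
        w, t = w_next, t_next
    return w


def ridge_augmented_scm(y1_pre, y0_pre, y0_post, lam=1.0):
    """Return (synthetic control counterfactual, ridge-augmented counterfactual) for the post-period."""
    w = scm_weights(y1_pre, y0_pre)
    scm = y0_post @ w  # (T1,) plain synthetic control
    # Ridge regression across donors of post-period outcomes on pre-period outcomes (centered).
    x = y0_pre.T - y0_pre.T.mean(axis=0)    # (J, T0)
    y = y0_post.T - y0_post.T.mean(axis=0)  # (J, T1)
    eta = np.linalg.solve(x.T @ x + lam * np.eye(x.shape[1]), x.T @ y)  # (T0, T1)
    imbalance = y1_pre - y0_pre @ w  # pre-period misfit left by the weights
    return scm, scm + imbalance @ eta


# Hypothetical data: 8 pre-periods, 3 post-periods, 4 donors.
rng = np.random.default_rng(0)
y0_pre = rng.uniform(100, 300, size=(8, 4))
y0_post = rng.uniform(100, 300, size=(3, 4))
y1_pre = y0_pre @ np.array([0.5, 0.3, 0.2, 0.0])  # inside the donors' convex hull
scm, ascm = ridge_augmented_scm(y1_pre, y0_pre, y0_post)
print(np.round(scm, 2), np.round(ascm, 2))
```

Because the treated series here lies inside the donors' convex hull, the imbalance term is close to zero and the two counterfactuals agree to within solver tolerance. With a poor pre-period fit, the correction term grows and the two estimators can diverge.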

This still left a puzzle regarding how the Kaplan Response manufactured a result that was “flipped” when compared to the De-Prosecution Article and the real-world increases in homicides in Philadelphia. With all other research avenues exhausted, that left the raw data created by the Kaplan Response as the likely culprit.

A review of the data assembled and used by the Kaplan Response revealed some staggeringly large errors that explain how and why they reached their erroneous results. The Kaplan Response stated that their models selected the same donor cities as the De-Prosecution Article models: Detroit, New Orleans, and New York. When addressing the variable of homicide clearances, the Kaplan Response injected homicide clearance data for the donor cities that were incorrect by extremely large percentages.[7]

To use a few examples, the Kaplan Response data report that donor city New York cleared 0% of its homicides in 2010, 2011, and 2012.[8] New York then rebounds to clear 101% of its homicides in 2017, according to the Kaplan Response authors. The Kaplan Response included gross errors for clearance rates for the other donor cities for their model, such as Detroit with a 16% clearance rate in 2010, an 11% clearance rate in 2011, an 8% clearance rate in 2012, and a 14% clearance rate in 2016. According to the data used by the Kaplan Response, New Orleans only cleared 15% of its homicides in 2012, while Los Angeles (a substitute donor in the robustness modeling) cleared 107% of its homicides in 2013. There are similar errors throughout the clearance data generated and used by the Kaplan Response. As previously noted, the Kaplan Response deleted median income as a variable, further undermining data integrity. The wildly incorrect clearance data were then applied in the augmented synthetic control method preferred by the Kaplan Response, causing the fatal error in its ultimate and bizarre statistical conclusion. The authors of the Kaplan Response have engaged in research conduct that is either remarkably disingenuous or remarkably sloppy.[9]
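A simple screening step, checking assembled clearance data for values outside a plausible range before modeling, can be sketched as follows. The rows are only the values quoted in the preceding paragraph; the screening band and function name are hypothetical choices for illustration, and flagged rows are meant for manual checking against source reports rather than automatic deletion.

```python
# (city, year, clearance rate in percent) as quoted above from the Kaplan Response data.
REPORTED = [
    ("New York", 2010, 0), ("New York", 2011, 0), ("New York", 2012, 0),
    ("New York", 2017, 101),
    ("Detroit", 2010, 16), ("Detroit", 2011, 11), ("Detroit", 2012, 8), ("Detroit", 2016, 14),
    ("New Orleans", 2012, 15),
    ("Los Angeles", 2013, 107),
]


def flag_for_review(rows, low=20, high=100):
    """Return rows whose clearance rate falls outside [low, high] percent.

    The default band is a hypothetical screening choice, not an established standard.
    """
    return [(city, year, rate) for city, year, rate in rows if not low <= rate <= high]


for city, year, rate in flag_for_review(REPORTED):
    print(f"check {city} {year}: {rate}%")
```

Even a minimal band of 1% to 100% (`low=1`) flags the three zero-clearance years for New York and the two values above 100%.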

Once these erroneous data are corrected and the accurate variables are re-introduced into the dataset, the augmented synthetic control method replicates the results of the original model from the De-Prosecution Article, as shown above.[10] Having addressed the most salient aspect of the Kaplan Response, the remaining minor issues raised by those authors can be addressed concisely.

4 | OTHER ISSUES RAISED BY THE KAPLAN RESPONSE

4.1 | THE HOMICIDE DATA USED ARE ACCURATE

The Kaplan Response questions the accuracy of the underlying homicide data (Kaplan et al., 2022). However, the homicide data used in the De-Prosecution Article are correct and identified accurately.

As an initial matter, homicides were chosen as the dependent variable because other reported crime data are notoriously inaccurate. The number of other crimes (e.g., aggravated assaults) as reported by any city can vary in accuracy tremendously on a year-to-year basis, is subject to multiple feedback loops, and is virtually impossible to validate independently (Buil-Gil et al., 2021). Homicides, by comparison, are reported consistently, are independent of any police investigative activity, and are verifiable (or subject to correction) through public reports.

In the De-Prosecution Article, the very first figure (Figure 1) specifies that the Philadelphia homicides were gathered from the Philadelphia Police Department (Hogan, 2022). The original article then identifies that the rest of the homicide data were gathered from the SHR, and specifically cites the Kaplan-curated database of the SHR data. The original article also notes that some cities required acquiring the data independently, such as cities in Florida, which do not contribute to the SHR but maintain their own collection of homicide data through the Florida Department of Law Enforcement.[11] The process used for compiling the homicide data was to use the SHR data, but spot-check for any obvious inconsistencies or missing data. The SHR proves to be relatively accurate, as a sampling of 2020 homicide data demonstrates in Table 1:[12]

Table 1

Sampling of Homicide Totals for 2020

| City | SHR | Police/Public Data Source |
| --- | ---: | ---: |
| New York | 468 | 462 |
| Chicago | 771 | 774 |
| San Antonio | 130 | 128 |
| San Jose | 40 | 40 |
| Detroit | 325 | 327 |
| San Francisco | 48 | 48 |
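The agreement in Table 1 can be quantified as the percentage difference of each SHR count from the police or public count. The sketch uses only the six rows of the table; the function name is hypothetical.

```python
# (city, SHR count, police/public count) for 2020, from Table 1.
TABLE_1 = [
    ("New York", 468, 462), ("Chicago", 771, 774), ("San Antonio", 130, 128),
    ("San Jose", 40, 40), ("Detroit", 325, 327), ("San Francisco", 48, 48),
]


def percent_differences(rows):
    """Percentage difference of each SHR count from the police/public count."""
    return {city: 100.0 * (shr - police) / police for city, shr, police in rows}


for city, diff in percent_differences(TABLE_1).items():
    print(f"{city}: {diff:+.2f}%")
```

On these six rows, the largest discrepancy is San Antonio at about 1.6%, far smaller than the shortfalls in Philadelphia's SHR counts described in Section 2.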

The Kaplan Response takes the position that the homicide data cannot be trusted because they may be inaccurate and the authors are not sure where the data were acquired. This complaint is misguided because the De-Prosecution Article clearly identifies the source of the homicide data. It is doubly misguided because the original article cites to and is based on SHR data curated by the primary author of the Kaplan Response, Jacob Kaplan. Essentially, the Kaplan Response is criticizing Kaplan’s data. Moreover, the Kaplan Response was able to replicate the results of the De-Prosecution Article precisely using the same homicide data, and then apparently bases all of its further alternative models on the exact same homicide data used by the De-Prosecution Article. The De-Prosecution Article went through the additional step of doing a manual spot-check against publicly available sources of homicide data to verify accuracy, a practice that the Kaplan Response appears to have neglected for their homicide and clearance data. Simply put, the homicide data used in the De-Prosecution Article are accurate, and certainly more reliable than most data used in crime studies.[13]

4.2 | THE KAPLAN RESPONSE’S ATTEMPT TO USE RATES IS MISINFORMED

The Kaplan Response’s next attack is to argue that homicide rates should be used instead of the level measurement of homicides (i.e., the raw number of homicides) (Kaplan et al., 2022). The De-Prosecution Article expressly notes that Abadie et al. (2021) stated that use of rates in an improper context will cause overfitting, rendering results invalid. Recognizing this problem, the original study used the raw number of homicides in the main model,[14] but exhibited a rate-based model in the robustness section. In that alternative model, the De-Prosecution Article demonstrated the exact overfitting issue warned about by Abadie, noted that the trend was still towards de-prosecution being associated with large increases in homicides, and used an example to show exactly how data can be manipulated to look significant and thus should not be used (Hogan, 2022).

The Kaplan Response attempts to use homicide rates, moves the treatment date to different years, and argues that altering the treatment date while using rates undermines the original work. The Kaplan Response misses the entire point that using homicide rates results in overfitting, and thus ends up criticizing the exact methodology and results that the De-Prosecution Article noted were invalid. The Kaplan Response also complains about the use of 2017 as the treatment date for this robustness testing, failing to note that the whole point of that brief section was to expose an inappropriate result. In this already fatally flawed section of the Kaplan Response, the authors interestingly fail to test 2018-19 as the post-period, the exact period when District Attorney Krasner was in office and de-prosecution was at its most extreme.

Finally and most importantly, hidden in a footnote, the Kaplan Response admits that even when they engage in such selective data choices, when re-estimating the model based on their own selected parameters and using homicide rates, they estimate an increase in the homicide rate of 5.37 per 100,000 (p=0.002) for Philadelphia, even larger than the invalid rate-based model calculated and called out in the De-Prosecution Article (4.06 homicides/100,000, p=0.037). With a Philadelphia population in excess of 1.5 million, the Kaplan Response is suggesting an annualized increase of 80.55 homicides per year in Philadelphia associated with de-prosecution, consistent with the other modeling in both the original De-Prosecution Article and the Kaplan Response replication. Thus, the Kaplan Response validates points from the De-Prosecution Article about the problem with using homicide rates,[15] then inadvertently demonstrates that the homicide trends noted in the De-Prosecution Article are robust even when using flawed methodology.
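The conversion from a rate per 100,000 residents to an annual count is a single multiplication. The sketch uses 1.5 million, the lower bound on Philadelphia's population given in the text; the function and constant names are hypothetical.

```python
def rate_to_count(rate_per_100k, population):
    """Convert a rate per 100,000 residents into an annual count for a given population."""
    return rate_per_100k * population / 100_000


PHILADELPHIA_FLOOR = 1_500_000  # "in excess of 1.5 million," so results are lower bounds
print(round(rate_to_count(5.37, PHILADELPHIA_FLOOR), 2))  # 80.55 (Kaplan Response footnote)
print(round(rate_to_count(4.06, PHILADELPHIA_FLOOR), 2))  # 60.9 (rate-based model in the original article)
```

Because the population exceeds 1.5 million, both implied counts are floors rather than point estimates.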

4.3 | EXPANDING THE PRE-PERIOD IS MISGUIDED

The Kaplan Response suggests that the results of the De-Prosecution Article would not be valid unless the pre-period for the synthetic control model is expanded (Kaplan et al., 2022). This argument is theoretically interesting, but ultimately incorrect because the Kaplan Response fails to recognize the contextual, real-world influences on statistical modeling. In addition, even applying this suggestion serves as further proof that the original results are robust.

The De-Prosecution Article uses a pre-period of 2010-14 and a post-period of 2015-19. The 2015-19 post-period was triggered by the data indicating the break-point for the beginning of de-prosecution in 2015, then bounded by 2019 based on the available data and the decision to exclude 2020-21 because of the potentially confounding effects of the Covid-19 pandemic and murder of George Floyd. The latter point about avoiding major confounding variables is a crucial example of applying real-world context to constrain statistical modeling. The main model in the De-Prosecution Article then used 2010-14 as the pre-period. With five years of post-period data, five years of pre-period data is intuitively appealing as providing balanced and sufficient data, while offering a reasonable measure of statistical power. In addition, the year 2010 was a natural cut-off for the pre-intervention period because it coincided with District Attorney Seth Williams taking office in Philadelphia, allowing a clean break from the previous prosecutorial regimes.

The Kaplan Response is unsatisfied with the 2010-14 pre-period, demanding that the pre-intervention period begin somewhere from 2000-09. The Kaplan Response then re-runs the synthetic control method varying the start of the pre-period for every year from 2000-09, without identifying the donor cities to check whether the selections make any sense. However, every alternative model run in the Kaplan Response to expand the pre-period shows large increases in homicides in Philadelphia associated with de-prosecution, varying from an additional 26 homicides per year to an additional 74 homicides per year. Additionally, the Kaplan Response concedes that every estimate using a pre-period of 2005 or later is statistically significant.[16] This demonstrates that the specific homicide differential may be time-sensitive, an unsurprising result, but the trend is always positive, large, and consistent with the original specification. As with their independent replication of the preferred results for the De-Prosecution Article, the authors of the Kaplan Response should be complimented, this time for performing a robustness test that I did not consider, but which buttresses the original conclusions.

Ultimately, when demanding a longer pre-period, the authors of the Kaplan Response fail to take into consideration some real-world constraints on valid statistical modeling. The De-Prosecution Article excluded 2020-21 from the post-period because of the potentially confounding influences of the pandemic and George Floyd murder, concerned that significant national-level events could bias estimates. It is necessary to be alert to confounding factors in the pre-intervention period as well (Bonander et al., 2021). In the Kaplan Response, forcing the pre-intervention period to earlier years introduces major confounding historical variables. Two immediate major confounders ignored in the Kaplan Response are the Great Recession of 2008-09 and the 9/11 terrorist attacks of 2001, both of which have been explored in detail for potential effects on crime, and both of which are squarely within the Kaplan Response’s proposed pre-intervention period (Davis et al., 2010; Finklea, 2011; LaFree & Dugan, 2015; Light et al., 2019; Ghosh, 2018; Nguyen, 2021; Rosenfeld, 2014; Rosenfeld & Fornango, 2007; Wilson, 2011; Wolff et al., 2014). The Kaplan Response could keep moving backwards through time, ignoring wars, depressions, and/or prior epidemics, and the authors would find that the modeling results vary across time. The choice of 2010-14 as the pre-period was not an accident, but a balanced attempt to use sufficient pre-intervention data while respecting the real-life limitations on choosing an uncompromised time period for comparison. All researchers must respect potential historical confounders in constructing pre- and post-intervention periods when conducting statistical modeling.

4.4 | REDUCING THE NUMBER OF DONOR CITIES IS UNSOUND

Another critique from the Kaplan Response is that the donor pool should be restricted to cities with populations similar to Philadelphia.[17] The authors contend that when such a restriction is added, the results of the De-Prosecution Article no longer demonstrate statistical significance (Kaplan et al., 2022). In making this argument, the Kaplan Response is ignoring basic statistical facts and prior valid examples.[18]

For any DiD analysis, including synthetic controls, it is not the population match that matters. The necessary conditions are parallel trends during the pre-intervention period and the lack of confounders (Abadie, 2021; Bonander et al., 2021; Bouttell et al., 2018; Kreif et al., 2016). This is foundational statistical methodology, covered in any basic course on causal inference.
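A minimal illustration of the parallel-trends idea: two hypothetical series at very different levels that share the same linear pre-period slope. The series and the helper `ols_slope` are hypothetical, and comparing least-squares slopes is only a rough diagnostic, not a formal test.

```python
def ols_slope(xs, ys):
    """Least-squares slope of ys on xs."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


# Hypothetical pre-period series: very different levels, identical trends.
YEARS = [2010, 2011, 2012, 2013, 2014]
TREATED = [300, 302, 304, 306, 308]
COMPARISON = [30, 32, 34, 36, 38]
print(ols_slope(YEARS, TREATED), ols_slope(YEARS, COMPARISON))  # 2.0 2.0
```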

Turning again to the classic tobacco study by Abadie et al. (2010) as a reference point, that analysis used the 50 states of the United States as the units of comparison, with California as the treatment state. After excluding potentially treated states, the study used the 38 remaining states as potential donors. California had a population of approximately 37 million in 2010, much larger than any potential donor state (U.S. Census, 2010). The range of population within the potential donor states was tremendous: Texas had a population of 25 million, while Wyoming had a population of approximately 500,000 (U.S. Census, 2010). None of this troubled Abadie and colleagues, because they were matching on trends within the units, not simply on population. By comparison, the De-Prosecution Article uses twice as many units (100 cities instead of 50 states), and the population range within its units is much smaller than the range in Abadie et al. (2010) (approximately 37 million v. 500,000 among the states, compared to approximately 8.8 million v. 200,000 among the cities). Again, these comparisons are statistically irrelevant because it is the parallel trends that are important in any DiD analysis.
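Using only the approximate figures in the preceding paragraph, the spread can be expressed as the ratio of the most populous unit to the least populous one, first for the states and then for the cities:

```latex
\frac{37{,}000{,}000}{500{,}000} = 74
\qquad \text{versus} \qquad
\frac{8{,}800{,}000}{200{,}000} = 44
```

On this rough measure, the population spread in Abadie et al. (2010) is roughly 1.7 times that of the city-level comparison.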

The Kaplan Response's attempt to show that reducing the number of cities in the donor pool renders the original results statistically insignificant is mechanically obvious and meaningless. Reducing the donor pool to five cities will have the mechanical effect of destroying any statistical power for the study (the smallest possible p-value from that donor pool would be 0.2). This is not a robustness test, but a statistical truism. The only plausible reason for the Kaplan Response to suggest restricting the number of cities is to reduce the potential statistical power of the analysis, a form of reverse p-hacking. It is akin to saying that artificially reducing the number of homicides in Philadelphia might lead to a non-statistically significant result, which is another tactic attempted by the Kaplan Response. Nevertheless, a review of the Kaplan Response shows that including the 30 largest cities, or any greater number of the most populous cities in the United States, as a potential donor pool yields statistically significant DiD results in the range of 50-100 increased homicides in Philadelphia, providing another strong robustness test verifying the original results. The Kaplan Response again validates the robustness of the results from the De-Prosecution Article.
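The floor on the p-value follows from how placebo inference works. In the placebo-in-space procedure of Abadie et al. (2010), the treated unit's test statistic is ranked against the same statistic computed for every donor, so when N units in total enter the comparison, the smallest attainable p-value is 1/N. The sketch below is a hypothetical helper written for illustration, not code from either article; the choice of test statistic is left to the caller.

```python
def placebo_p_value(treated_stat: float, placebo_stats: list[float]) -> float:
    """Rank-based p-value from a placebo-in-space test (hypothetical helper).

    treated_stat:  test statistic for the treated unit (e.g. a post/pre fit-error ratio)
    placebo_stats: the same statistic recomputed with each donor treated as a placebo
    """
    all_stats = [treated_stat, *placebo_stats]
    # Count every unit, the treated one included, whose statistic is at least as extreme.
    at_least_as_extreme = sum(1 for s in all_stats if s >= treated_stat)
    return at_least_as_extreme / len(all_stats)


def smallest_attainable_p(n_units: int) -> float:
    """Floor on the p-value when n_units (treated + placebos) enter the comparison."""
    return 1 / n_units


# A treated statistic that dominates every placebo still cannot fall below 1 / n_units.
print(placebo_p_value(10.0, [1.0, 2.0, 3.0, 4.0]))  # five units in total
print(smallest_attainable_p(5), smallest_attainable_p(39))
```

With five units in total, even the most extreme possible treated result yields 0.2. With the 38 donor states in Abadie et al. (2010), 39 units enter the comparison, so the floor is 1/39, about 0.026.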

If the Kaplan Response wishes to engage in a serious argument about matching city populations and other issues in examining the impact of prosecutorial policies, its authors might wish to devote their free time to a study first presented over a year ago, the only other study to have addressed "progressive" prosecution and homicides. In Agan et al. (2022), the authors claimed to be studying the impact of the election of progressive prosecutors on crime rates, and concluded, "We . . . find no significant effect of these prosecutors on serious crimes, including homicide." However, a closer reading of Agan et al. (2022) reveals a number of what the Kaplan Response would call "fatal flaws." On the issue of matching cities with similar populations, Agan et al. (2022) mix together jurisdictions as populous as Los Angeles (population 3.9 million) with jurisdictions as small as Pittsfield, MA (population 43,000). Agan and colleagues claim to have gathered their data directly from the prosecutors' offices, but they neither supply the data publicly nor draw on standardized and verifiable databases. Agan et al. (2022) claim to have obtained a list of progressive prosecutors from a nonprofit organization, without ever identifying the organization or performing any internal validation of the factors used to select the progressive prosecutors, leaving open the possibility that the authors of the Kaplan Response might accuse Agan and colleagues of simply cherry-picking low-crime jurisdictions.[19] Agan and co-authors use a pre-intervention period of only 12 months, not the 15 years demanded by the Kaplan Response. Agan et al. (2022) use a post-intervention period of only 24 months, failing to consider the necessary lag time for a criminal justice policy to take effect. The authors of Agan et al. (2022) include in their post-intervention analysis data for 2020-21, data that are likely aberrational and biased by the pandemic and the George Floyd protests. Agan et al. (2022) also include data from the prosecutorial regime of Alonzo Payne, who took office as district attorney only in 2021, integrating those data into their overall results based on a pre-period of merely four months before he took office and a post-period of just seven months while he was in office (Payne has since resigned amid a pending recall election and an investigation regarding violations of victims' rights). Because the Agan co-authors did not establish a control group of prosecutors for comparison, their analysis compares the progressive regime of since-recalled San Francisco District Attorney Chesa Boudin with the policies of his predecessor, the progressive San Francisco District Attorney George Gascón, who is then also included in the study as the progressive Los Angeles District Attorney. Accordingly, Boudin is compared to Gascón, and Gascón's regimes serve as both a pre-treatment and a post-treatment unit. In reality, Agan et al. (2022) lacked sufficient data to reach any conclusions, much less the confident conclusion that progressive prosecutors have no effect on "serious crimes, including homicide."[20]

The authors of the Kaplan Response were undoubtedly aware of the Agan et al. (2022) study; however, they did absolutely nothing to analyze or critique it. It may be that the authors of the Kaplan Response reserve their ire, energy, and statistical gymnastics for articles whose results disagree with their personal beliefs about prosecutorial strategies.

5 | UPDATED PARALLEL RESEARCH AND REAL-WORLD OBSERVATIONS

One of the important functions of studying a dynamic system is to update any analysis with parallel research and real-world observations. Since the drafting of the De-Prosecution Article, there have been advances in similar research to serve as a theoretical robustness test for the conclusions advanced in the original study. In addition, a large-scale natural experiment provides evidence supporting the theory that de-prosecution has a causal effect on homicides.

The parallel research is interesting because the researchers probably did not realize that they were implicitly studying de-prosecution. Johnson and Roman (2022) examined the impact of Covid-19 on shooting dynamics in the Kensington neighborhood of Philadelphia. The study found that the proliferation of drug markets associated with the pandemic was structurally and causally related to a measurable increase in shooting rates in the neighborhood, noting that "disrupting drug organizations, or otherwise curbing violence stemming from drug markets, may go a long way towards quelling citywide increases in gun violence." That conclusion about the necessity of disrupting drug organizations in order to quell gun violence applies equally to the Philadelphia DAO's de-prosecution of drug trafficking crimes from 2015-19. Piza and Connealy (2022) studied the effect of a police-free zone established in Seattle during the George Floyd-related protests in 2020. Using a clever micro-synthetic control analysis, that study found that the absence of police led to a significant increase in crime within the police-free zone, which then spilled over into surrounding areas. Although Piza and Connealy (2022) were specifically studying the effect of de-policing, they also managed to study the effect of de-prosecution, since the absence of any policing also signaled the absence of prosecution. Finally, Kennedy (2022) analyzed homicide offenders in Baltimore during 2015-22, a period coinciding with the tenure of a de-prosecution regime in that city, and determined that a significant number of homicides in Baltimore would have been prevented if the defendants had been incapacitated for their prior offenses through prosecutions resulting in standard guideline sentences. Kennedy (2022) supplies detailed descriptive data for one mechanical aspect of the impact of de-prosecution on homicides.

The Covid-19 pandemic provided a natural experiment testing de-prosecution. In 2020-21, the criminal justice system was interrupted by pandemic-related shutdowns, which can be considered a nationwide, involuntary de-prosecution regime. The de-prosecution theory posits that large-scale reductions in prosecutions across felonies and misdemeanors would have a causal impact on increasing homicides. Consistent with that theory, the United States saw an almost 30% increase in homicides in 2020, affecting almost every major city. This was the largest one-year spike in homicides in recorded American history, and homicides continued to increase in 2021 while prosecutions remained sidelined. There are many potential confounding factors to address (e.g., the murder of George Floyd and differential pandemic responses across regions), but this trend is strongly suggestive of the causal link between de-prosecution and homicides. De-prosecution provides a straightforward mechanical explanation for the rise in homicides during 2020-21, as opposed to the more fragile logic of alternative causal theories. An early descriptive analysis has already found that a prosecutor's office that resumed prosecutions earlier and at a greater scale had some anecdotal success in beginning to reduce violent crime, specifically homicides, compared with a prosecutor's office that remained unable to prosecute because of the pandemic (MacGillis, 2022). This issue merits further consideration and analysis.

6 | CONCLUSION

Once the methodological and data errors are corrected in the Kaplan Response, the substantive impact of that manuscript is to serve as a strong replication of and expanded robustness testing for the De-Prosecution Article. The authors of the Kaplan Response, while making at least one remarkable data error that will be difficult to forget, have reinforced the conclusion that broadscale de-prosecution as used in Philadelphia is having a causal effect on increasing homicides in that city. Chief prosecutors in large cities would be well-served to consider the results of the De-Prosecution Article. The statistic of 74.79 homicides per year is not just a number, but represents actual murder victims, usually in the most disadvantaged sections of American cities.

When beginning this research, I expected that the original study would show nothing statistically significant, based on what I considered to be a lack of sufficient post-period data (as the academics whom I consulted during the course of the study can confirm). To stay conservative, I employed a two-sided test throughout, even though a one-sided test of statistical significance could have been justified. The reviewers and editors for the original study demanded rigorous robustness testing (not all of which could be included in the final article due to length constraints). The synthetic control method is designed to reduce subjective inputs from researchers, as specifically noted by Abadie et al. (2010).
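Why the two-sided test is the more conservative choice can be shown with a hypothetical placebo comparison. The sketch below is an illustration only, not the code used in the De-Prosecution Article; the test statistic (the average post-period gap between actual and synthetic outcomes), the function name, and the numbers are assumptions. Under a two-sided test, a placebo with a large gap in either direction counts against the treated result; under a one-sided test for an increase, only placebos with gaps at least as large in the upward direction do.

```python
from statistics import mean


def permutation_p_values(treated_gaps, placebo_gaps):
    """Return (two_sided, one_sided) placebo p-values for a mean post-period gap.

    treated_gaps: post-period gaps (actual minus synthetic) for the treated unit
    placebo_gaps: one sequence of post-period gaps per placebo (donor) unit
    """
    treated = mean(treated_gaps)
    stats = [treated] + [mean(g) for g in placebo_gaps]
    n = len(stats)
    # Two-sided: a unit counts if its gap is at least as large in magnitude,
    # whether it points up or down.
    two_sided = sum(abs(s) >= abs(treated) for s in stats) / n
    # One-sided (an increase is hypothesized): only gaps at least as large
    # in the upward direction count.
    one_sided = sum(s >= treated for s in stats) / n
    return two_sided, one_sided


# Hypothetical gaps: the treated unit rises by 5 per year; one placebo falls by 6.
print(permutation_p_values([5, 5], [[-6, -6], [1, 1], [2, 2], [-1, -1]]))
```

Here the placebo whose gap falls by 6 counts against the treated unit only under the two-sided test, so the two-sided p-value (0.4) is double the one-sided value (0.2). Whenever the treated gap is positive, the one-sided p-value cannot exceed the two-sided one.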

The authors of the Kaplan Response make a bold accusation of cherry-picking data and methods. To the contrary, the De-Prosecution Article discussed various ways of assessing the data and applied different methods, analyzing the accuracy of the underlying data and the suitability of each method. The original article did not shy away from presenting potentially contrary results, such as when demonstrating the lack of any apparent causal effect on robbery and burglary offenses. The original study followed the descriptive data to set the breakpoint for de-prosecution at 2015, years prior to the election of District Attorney Larry Krasner, despite the fact that choosing 2017 or 2018 as the treatment date yielded a larger DiD result (89.35-99.81 homicides) and attributing the increases solely to Krasner's regime would have drawn more attention. In retrospect, the Kaplan Response's complaints about the data and methodology in the original De-Prosecution Article are ironic.

Criminology is at its best when sifting through complex and unexplored data, then objectively applying the results of that analysis to difficult problems in the criminal justice system. Vigorous and informed debate is welcome. I look forward to continuing to refine this research and engaging with other people who are interested in examining hard questions.

References

Abadie, A., Diamond, A. & Hainmueller, J. (2010). Synthetic Control Methods for Comparative Case Studies: Estimating the Effect of California’s Tobacco Control Program. Journal of the American Statistical Association, 105(490):493–505.

Abadie, A. (2021). Using Synthetic Controls: Feasibility, Data Requirements, and Methodological Aspects. Journal of Economic Literature, 59(2):391–425.

Abadie, A. & L’Hour, J. (2021). A Penalized Synthetic Control Estimator for Disaggregated Data. Journal of the American Statistical Association, 116(536):1789-1803.

Agan, A., Doleac, J. & Harvey, A. (2022). Prosecutorial Reform and Local Crime Rates. Working Paper.

Avdija, A., Gallagher, C. & Woods, D. (2022). Homicide Clearance Rates in the United States, 1976-2017: Examining Homicide Clearance Rates Relative to the Situational Circumstances in Which They Occur. Violence and Victims, 37(1):101-115.

Bonander, C., Humphreys, D. & Degli Esposti, M. (2021). Synthetic Control Methods for the Evaluation of Single-Unit Interventions in Epidemiology: A Tutorial. American Journal of Epidemiology, 190(12):2700–2711.

Bouttell, J., Craig, P., Lewsey, J., Robinson, M. & Popham, F. (2018). Synthetic Control Methodology as a Tool for Evaluating Population-Level Health Interventions. Journal of Epidemiology and Community Health, 72(8):673-678.

Braga, A. (2021). Improving Police Clearance Rates of Shootings: A Review of the Evidence. Manhattan Institute Report, 1-15.

Buil-Gil, D., Moretti, A. & Langton, S. (2021). The Accuracy of Crime Statistics: Assessing the Impact of Police Data Bias on Geographic Crime Analysis. Journal of Experimental Criminology, https://doi.org/10.1007/s11292-021-09457-y.

Davis, L., Pollard, M., Ward, K., Wilson, J., Varda, D., Hansell, L. & Steinberg, P. (2010, December). Long-Term Effects of Law Enforcement's Post-9/11 Focus on Counterterrorism and Homeland Security. Rand Corporation and U.S. Department of Justice Office of Justice Programs, NCJ Number 232791, 1-177.

Finklea, K. (2010, December 22). Organized Crime in the United States: Trends and Issues for Congress. Congressional Research Service, 1-28.

Ghosh, P. (2018). The Short-Run Effects of the Great Recession on Crime. Journal of Economics, Race, and Policy, 1:92–111.

Hogan, T. (2022). De-Prosecution and Death: A Synthetic Control Analysis of the Impact of De-Prosecution on Homicides. Criminology & Public Policy (forthcoming).

Johnson, N. & Roman, C. (2022). Community Correlates of Change: A Mixed-Effects Assessment of Shooting Dynamics During COVID-19. PLOS ONE 17(2):e0263777.

Kaplan, J. (2021). Uniform Crime Reporting (UCR) Program Data: Supplementary Homicide Reports, 1976-2018. Ann Arbor, MI: Inter-university Consortium for Political and Social Research [distributor], 2021-01-16.

Kaplan, J., Naddeo, J. & Scott, T. (2022). De-Prosecution and Death: A Comment on the Fatal Flaws in Hogan (2022). Working Paper.

Kennedy, S. (2022). Baltimore’s Preventable Murders: The Role of Prior Convictions and Sentencing in Future Homicides. Maryland Public Policy Institute Report, 1-7.

Kreif, N., Grieve, R., Hangartner, D., Turner, A. J., Nikolova, S. & Sutton, M. (2016). Examination of the Synthetic Control Method for Evaluating Health Policies with Multiple Treated Units. Health Economics, 25:1514–1528.

LaFree, G. & Dugan, L. (2015). How Has Criminology Contributed to the Study of Terrorism since 9/11? Sociology of Crime, Law and Deviance, 20:1-23.

Law Enforcement Legal Defense Fund (2022, June). Justice For Sale: How George Soros Put Radical Prosecutors in Power, 1-17.

Lepola, J. (2020, July 23). The Political Ties to Travel. WBFF (Baltimore).

Light, M., Massoglia, M. & Dinsmore, E. (2019). How Do Criminal Courts Respond in Times of Crisis? Evidence from 9/11. American Journal of Sociology, 125(2):485–533.

MacGillis, A. (2022, July 19). Two Cities Took Different Approaches to Pandemic Court Closures. They Got Different Results. ProPublica.

Nguyen, T. (2021). The Effectiveness of White‐Collar Crime Enforcement: Evidence from the War on Terror. Journal of Accounting Research, 59(1), https://doi.org/10.1111/1475-679X.12343.

Parast, L., Hunt, P., Griffin, B. & Powell, D. (2020). When Is a Match Sufficient? A Score-Based Balance Metric for the Synthetic Control Method. Journal of Causal Inference, 8:209-228.

Philadelphia Police Department Crime Maps & Stats, phillypolice.com/crime-maps-stats (2022).

Piza, E. & Connealy, N. (2022). The Effect of the Seattle Police-Free CHOP Zone on Crime: A Microsynthetic Control Evaluation. Criminology & Public Policy, 21:35–58.

Rosenfeld, R. (2014). Crime and the Great Recession: Introduction to the Special Issue. Journal of Contemporary Criminal Justice, 30(1):4-6.

Rosenfeld, R. & Fornango, R. (2007). The Impact of Economic Conditions on Robbery and Property Crime: The Role of Consumer Sentiment. Criminology, 45:735-769.

Soros, G. (2022, July 31). Why I Support Reform Prosecutors. Wall Street Journal.

U.S. Census Bureau (2010, 2020).

Wilson, J. (2011). Crime and the Great Recession. City Journal.

Wolff, K., Cochran, J. & Baumer, E. (2014). Reevaluating Foreclosure Effects on Crime During the "Great Recession". Journal of Contemporary Criminal Justice, 30(1):41-69.

[1] The De-Prosecution Article was published by Criminology & Public Policy on July 7, 2022 (https://onlinelibrary.wiley.com/doi/epdf/10.1111/1745-9133.12597). On July 25, 2022, the Kaplan Response was posted by the three authors – Jacob Kaplan (Princeton University), J.J. Naddeo (Georgetown University and Georgetown University Law Center), and Tom Scott (RTI International) – on Twitter and linked to a publicly accessible document (https://drive.google.com/file/d/12aZDxYC7MUkZHwORkCECHNWV-SOei0nx/view).

[2] The effect size is driven by two trend lines: (1) the notable increase in homicides in real Philadelphia; and (2) the algorithm calculates that homicides in synthetic Philadelphia would have continued to decline from 2015-19 (absent de-prosecution), consistent with the trends in the matched donor cities.
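In the notation of Abadie et al. (2010), with unit 1 as the treated city and units j = 2, ..., J+1 as donors with fitted weights w_j^*, the gap in year t is the vertical distance between the two trend lines described above. Summarizing it as an average over the post-intervention years T_1 (here 2015-19) is shown as an assumed illustration of how a per-year figure can be formed:

```latex
\hat{\tau}_{t} = Y_{1t} - \sum_{j=2}^{J+1} w_{j}^{*} Y_{jt},
\qquad
\bar{\tau} = \frac{1}{|\mathcal{T}_{1}|} \sum_{t \in \mathcal{T}_{1}} \hat{\tau}_{t}
```

A rising actual series and a declining synthetic series both widen each yearly gap, which is why the two trends jointly drive the size of the estimate.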

[3] The main author of the Kaplan Response, Jacob Kaplan, curates a database with the SHR data and until recently was working directly with the Philadelphia District Attorney's Office (DAO), and thus must have known that this discrepancy exists. In a later section of the Kaplan Response, the authors admit that they were aware of this data discrepancy. Kaplan also failed to disclose his affiliation with the Philadelphia DAO anywhere in the Kaplan Response, which includes no statement of interests.

[4] The Kaplan Response complains that its authors were not given access to the compiled data, underlying data, synthetic control code, and cleaning code used by the De-Prosecution Article. I am still using the data and code in research involving 2020 and 2021, and the senior academics I consulted generally advised that researchers do not share their data while the research is ongoing. To keep other researchers from making the same error regarding Philadelphia homicides as the Kaplan Response, I will post the correct homicide data publicly, with the complete dataset posted when I am finished analyzing the 2020 and 2021 results.

[5] The Kaplan Response professes not to understand how New York City, composed of five boroughs, was classified as “middle.” Three of the boroughs were identified as “traditional” or “middle,” while two of the boroughs were identified as “progressive,” yielding an aggregate classification of “middle.”

[6] The Kaplan Response also is misreading the original synthetic control plot. Because the study uses yearly data, the marker at year 2015 has the algorithm measuring backwards through 2014-15. If anything, real Philadelphia lies below synthetic Philadelphia during the final pre-treatment year of 2014, demonstrating the conservative nature of the methodology. It should also be noted that there is no official consensus on what exactly constitutes a good fit for a pre-intervention period, leaving researchers to identify the differential mathematically and employ common sense, although new methods have been discussed to address the issue of fit (Bouttell et al., 2018; Parast et al., 2020).
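The most common way to express the pre-intervention differential mathematically is the root mean squared prediction error (RMSPE) between the treated unit (unit 1) and its synthetic control, built from donors j = 2, ..., J+1 with fitted weights w_j^*, over the T_0 pre-intervention years:

```latex
\mathrm{RMSPE}_{\mathrm{pre}}
  = \left( \frac{1}{T_{0}} \sum_{t=1}^{T_{0}}
      \Bigl( Y_{1t} - \sum_{j=2}^{J+1} w_{j}^{*} Y_{jt} \Bigr)^{2} \right)^{1/2}
```

Lower values indicate a closer pre-intervention fit, but no universal threshold separates a good fit from a poor one. Abadie et al. (2010) rank placebo units by the ratio of post- to pre-intervention mean squared prediction error, which penalizes units whose pre-period fit was poor.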

[7] The model uses both homicide clearances by raw numbers and homicide rates as variables. The Kaplan Response reproduced its errors consistently in both the raw numbers and the rates.

[8] For a point of reference, the publicly available data on homicide clearances in New York City for 2010-12 show clearance rates in the range of 60-70% (Braga, 2021). Employing an independent and extremely conservative analysis, I estimated that the New York homicide clearance rates for the same period were 52-59%. There is no historical record in any medium or format of New York failing to clear any homicides during 2010-12 (with the exception of the Kaplan Response).

[9] Perhaps if the authors of the Kaplan Response had not been so quick to publish their findings via social media, instead subjecting themselves to rigorous peer review by the editors and reviewers at Criminology & Public Policy, they could have spared themselves this embarrassment. For other researchers using the newly introduced augmented synthetic control method, the Kaplan Response serves as a cautionary tale about the sensitivity of that method to poorly specified variables.

[10] The erroneous homicide clearance data assembled by the Kaplan authors did not affect the Kaplan Response's replication of the results from the De-Prosecution Article because the clearance data were not assigned any weight under the traditional synthetic control method.

[11] Baltimore and Birmingham regularly require manual checks because of missing or unreported data, although both cities were excluded from the main results in the De-Prosecution Article as potentially treated cities. Hialeah does not report to the SHR and is within the borders of Miami-Dade County, and thus is assigned duplicate homicide data. Other cities required occasional corrections, verified through multiple sources (e.g., police, media, or researcher databases).

[12] For those interested, Los Angeles, Atlanta, and Baltimore are cities that require significant corrections for reported 2020 SHR data (as well as Philadelphia).

[13] The main model in the De-Prosecution Article also used population, median income, clearance data, and prosecutor classification data. The Kaplan Response does not challenge the population and economic data. The Kaplan Response does not address the prosecutor classification data, merely copying it from the original study (albeit without the yearly variations). Regarding the clearance data, the Kaplan Response correctly ascertains that the De-Prosecution Article relied upon offender demographic information to calculate the clearance data, explicitly notes that this practice is acceptable, and eventually concedes that the data are accurate as calculated in the De-Prosecution Article (Kaplan et al., 2022; Avdija et al., 2022). Unfortunately, the authors of the Kaplan Response failed to check their own clearance data. In the alternative models, the De-Prosecution Article used additional economic data, other crimes data (with the caveat that such data are potentially unreliable), and administrative data. The Kaplan Response did not challenge any of the latter data.

[14] The level estimate of homicides is most robust to the problem of overfitting, which is why such data are used in the preferred model of the current study. Population is not a confounder unless it is related to treatment timing, and there is no suggestion that there was a mass migration from Philadelphia during the proposed treatment range.

[15] After consigning their rate-based main results to a footnote, the Kaplan Response then briefly mentions in a figure note the fact that their rate-based analysis also suffered from overfitting as warned about by Abadie et al. (2021), and thus is invalid (“Note: Weight placed on all donors, suggesting results should not be interpreted as true effect.”). Once again, the authors of the Kaplan Response are forced to agree with the conclusions of the De-Prosecution Article.

[16] When the pre-period is extended even farther back in time, the estimates become noisier, but the magnitude of the effects remains large and consistent with the original specification.

[17] Philadelphia is the sixth largest city in the United States, not the fifth largest as claimed by the Kaplan Response (U.S. Census, 2020).

[18] The Kaplan Response also raises a brief point about the potential differences between homicide numbers in counties and cities, where the city may not be co-terminous with the county. Earlier drafts of the De-Prosecution Article addressed this exact point and monitored the data for consistency; the reference was deleted in later versions to save space and because the point is obvious. Given that the Kaplan Response precisely replicated the results of the De-Prosecution Article using the traditional synthetic control method, it is apparent that the authors of that response used the same analysis to differentiate city-level homicide data from county-level data. Moreover, comparing a county with 500,000 citizens but no major cities to a county composed entirely of a major city certainly would cause validity concerns.

[19] Based on common sense, public-source research, and prior experience, my belief is that Agan et al. (2022) used a list from, and collaborated with, the organization Fair and Just Prosecution in their study. This list substantively replicated the extensive, multi-factor review that I performed for the De-Prosecution Article.

[20] George Soros referenced the Agan et al. (2022) study in an op-ed in the Wall Street Journal explaining why he supports progressive prosecutors, describing it as a “rigorous academic study” (Soros, 2022). Soros reportedly also provides funding to Fair and Just Prosecution (Lepola, 2020; LELDF, 2022). While this may raise a host of potential conflict of interest questions, the statement of Soros validates the De-Prosecution Article’s decision to use Soros funding as a factor in determining who qualifies as a “progressive” prosecutor for purposes of establishing a variable and excluding de-prosecuting jurisdictions from the potential donor pool.